Will AI Replace Human Oversight in Research Too?

The role of AI in the ethical review of clinical research has become a subject of heated debate. But before we take sides, let’s break it down. Here are the key facts and figures, raw and unfiltered, so you can judge for yourself. After all, AI didn’t just appear out of nowhere; we built it. The real question is, can we still control it?

The Disruption Has Begun

Are we ready to trust artificial intelligence with the ethical backbone of clinical research? Can a machine truly navigate the complex web of human morality, patient safety, and regulatory compliance? If AI is stepping into the realm of ethical review, what happens when algorithms make the final call on a life-altering medical trial? These are not hypothetical questions—they are the future staring us in the face.

A recent study, “Ethical Review of Clinical Research with Generative AI: Evaluating ChatGPT’s Accuracy and Reproducibility,” suggests that AI models like OpenAI’s GPT-4 and GPT-4o may do more than assist in ethical reviews: on some document-extraction tasks they show a speed, accuracy, and consistency that human review processes struggle to match. But should that comfort us, or worry us?

Why Ethical Reviews Are Broken—and AI Wants to Fix Them

Institutional Review Boards (IRBs) in the U.S. and Certified Review Boards (CRBs) in Japan play a critical role in safeguarding patient welfare and ensuring research integrity. However, studies have highlighted challenges in their review processes, including variability in review quality, inconsistencies in revision instructions, and delays in approval times.

- Inconsistency: Ethical reviews vary wildly between boards, leaving room for subjectivity and bias.

- Bureaucratic Gridlock: Endless paperwork and slow decision-making delay crucial medical advancements.

- Expert Shortages: There simply aren’t enough trained professionals to meet the growing demand for clinical trial evaluations.

The promise of AI? A system that removes human error, processes vast amounts of data instantly, and delivers unbiased, reproducible results. But is AI truly unbiased? And what happens when it fails?

The AI Experiment: What We Learned from the Study

To see if AI is ready to handle clinical research ethics, researchers put GPT-4 and GPT-4o to the test, asking them to analyze Japanese-language clinical research protocols and informed consent forms. Their mission:

- Extract research objectives with precision.

- Assess study design accurately.

- Evaluate ethical considerations in a way that aligns with human standards.

Then came the ultimate challenge: comparing AI’s decisions with those of human reviewers.

The Shocking Results: AI Is Winning

1. AI Outperforms Humans—But at What Cost?

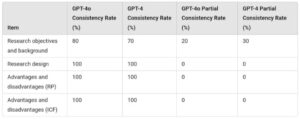

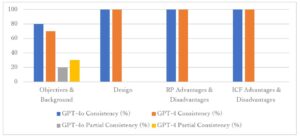

- GPT-4o nailed research design assessments with 100% accuracy, surpassing human consistency.

- It identified research objectives correctly 80% of the time, a rate that would make most human reviewers envious.

Key Notes:

- *RP:* Research protocol

- *ICF:* Informed consent form

- *Accuracy evaluation:* The consistency rate is the percentage of outputs that perfectly matched the research protocol; the partial consistency rate is the percentage of outputs in which the main information was mostly consistent. Both rates are based on the results of 10 trials.
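To make the two rates concrete, here is a minimal sketch of how they could be computed from 10 graded trials. The grade labels, the `rates` helper, and the 8/1/1 split are assumptions made up for illustration; they are not the study's data or its scoring code.

```python
from collections import Counter

# Hypothetical grades for 10 repeated runs on a single extraction item.
# "consistent" = perfect match, "partial" = main information mostly matches.
grades = ["consistent"] * 8 + ["partial", "inconsistent"]

def rates(grades):
    """Return consistency and partial consistency rates as percentages."""
    counts = Counter(grades)
    n = len(grades)
    return {
        "consistency_rate": 100 * counts["consistent"] / n,
        "partial_consistency_rate": 100 * counts["partial"] / n,
    }

result = rates(grades)
print(result)
```

Under this reading, an 80% consistency rate does not mean the other 20% of outputs were all wrong; some of them may fall into the partially consistent category.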

But what happens in the remaining 20% of cases, where AI’s output did not fully match the protocol? If a machine misinterprets a protocol, does it jeopardize patient safety?

2. Customization Makes AI Even More Dangerous (or Powerful)

- AI models using custom prompts outperformed standard ones, suggesting that tailoring the instructions, without retraining the model itself, makes AI markedly more effective.

- Retrieval-Augmented Generation (RAG) techniques improved AI’s ability to handle complex ethical reviews.
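For readers unfamiliar with RAG, the idea is to look up the most relevant reference passages first and hand them to the model alongside the question. The sketch below is a toy illustration only: the keyword-overlap scoring, the `retrieve` and `build_prompt` helpers, and the sample guideline sentences are all assumptions, and the study's actual setup is not described here.

```python
import re

def tokenize(text):
    """Lowercase a string and return its set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages, k=2):
    """Rank passages by how many words they share with the question."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda p: len(q & tokenize(p)), reverse=True)
    return ranked[:k]

def build_prompt(question, passages, k=2):
    """Assemble a prompt that places the retrieved passages before the question."""
    context = "\n".join(f"- {p}" for p in retrieve(question, passages, k))
    return f"Use only the context below.\n{context}\n\nQuestion: {question}"

# Hypothetical guideline snippets standing in for a real reference corpus.
guidelines = [
    "Informed consent forms must describe foreseeable risks to participants.",
    "Protocols must state the primary research objective.",
    "Data must be stored securely and access must be logged.",
]
question = "Does the consent form describe risks to participants?"
top = retrieve(question, guidelines, k=1)
prompt = build_prompt(question, guidelines, k=1)
print(prompt)
```

A production system would typically use embedding-based search rather than word overlap, but the flow is the same: retrieve first, then generate.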

But who controls the customization? If an AI is optimized by a private entity, could ethical reviews be gamed for corporate gain?

3. Reproducibility: AI’s Biggest Strength or Its Greatest Flaw?

- Unlike humans, AI doesn’t have good days and bad days: given the same documents, its outputs were largely reproducible across repeated runs.

- But does that mean it will consistently amplify biases baked into its training data?
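One simple way to put a number on reproducibility is to run the same request several times and measure how often the most common answer comes back. This is a sketch under that assumption; the `modal_agreement` helper and the sample outputs are hypothetical and are not the study's metric.

```python
from collections import Counter

def modal_agreement(outputs):
    """Share of runs that returned the single most common output."""
    if not outputs:
        raise ValueError("need at least one output")
    _, top_count = Counter(outputs).most_common(1)[0]
    return top_count / len(outputs)

# Hypothetical answers from 10 repeated runs on the same protocol.
runs = ["randomized controlled trial"] * 9 + ["single-arm study"]
score = modal_agreement(runs)
print(score)
```

Note that this score says nothing about correctness: a model that gives the same wrong or biased answer every time would still score 1.0, which is exactly the concern raised above.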

Case Study: AI vs. Human Review in Clinical Ethics

The study featured a real-world evaluation of AI’s effectiveness in analyzing a Phase II clinical trial for trastuzumab biosimilars in patients with advanced solid tumors. Researchers tested AI’s ability to extract critical components such as patient eligibility, study design, and potential risks.

Key Takeaways:

- AI successfully identified key elements with 100% accuracy in research design, proving its efficiency in structured data extraction.

- However, AI struggled with nuanced terminology, occasionally confusing closely related drug names such as “trastuzumab BS” (the biosimilar) and “trastuzumab.”

- Standardized document formatting greatly improved AI performance, reinforcing the need for structured input.

This case study underscores AI’s potential but also its limitations—particularly in handling unstructured or highly technical content that requires human expertise.

The Uncomfortable Truth: AI Isn’t Perfect, and That’s a Problem

AI’s rise in ethical reviews raises disturbing questions:

- Data Security: Research protocols contain confidential data. Can we trust AI systems to handle this information responsibly?

- Loss of Human Nuance: Ethical reviews require context, emotion, and moral reasoning—qualities AI lacks.

- The Standardization Dilemma: AI struggles with unstructured documents. Does this mean researchers must conform to AI, rather than AI adapting to them?

The Future: AI Will Take Over Ethical Reviews—But Should It?

If current trends continue, AI-assisted ethical reviews could become the industry standard within the next decade. Here’s what that would mean:

- Faster Approvals: Medical breakthroughs won’t be delayed by bureaucracy.

- Stronger Standardization: Ethics committees will no longer issue contradictory rulings.

- Reduced Expert Workload: Humans will focus on nuanced ethical dilemmas while AI handles the repetitive tasks.

But who will oversee AI? If regulators don’t act now, will AI-driven ethical reviews become the new black box, making decisions we don’t fully understand?

The Hardest Questions We Can’t Ignore

Artificial intelligence is already reshaping clinical research ethics, offering unprecedented speed, consistency, and efficiency. But before we surrender our ethical review process to an algorithm, we must ask:

- Who holds AI accountable for ethical missteps?

- How do we ensure AI doesn’t reinforce systemic biases?

- Can we build safeguards that prevent AI from making dangerous calls on clinical trials?

The future is no longer a distant possibility—it’s here. AI isn’t coming for ethical review boards. It has already arrived. The only question that remains: Do we control AI, or does it control us?